You are looking at the documentation of a prior release. To read the documentation of the latest release, please visit here.

KubeDB

Run Production-Grade Databases on Kubernetes

arrow_forwardStash

Backup and Recovery Solution for Kubernetes

arrow_forwardKubeVault

Run Production-Grade Vault on Kubernetes

arrow_forwardVoyager

Secure Ingress Controller for Kubernetes

arrow_forwardConfigSyncer

Kubernetes Configuration Syncer

arrow_forwardGuard

Kubernetes Authentication WebHook Server

arrow_forwardKubeDB simplifies Provisioning, Upgrading, Scaling, Volume Expansion, Monitor, Backup, Restore for various Databases in Kubernetes on any Public & Private Cloud

- task_altLower administrative burden

- task_altNative Kubernetes Support

- task_altPerformance

- task_altAvailability and durability

- task_altManageability

- task_altCost-effectiveness

- task_altSecurity

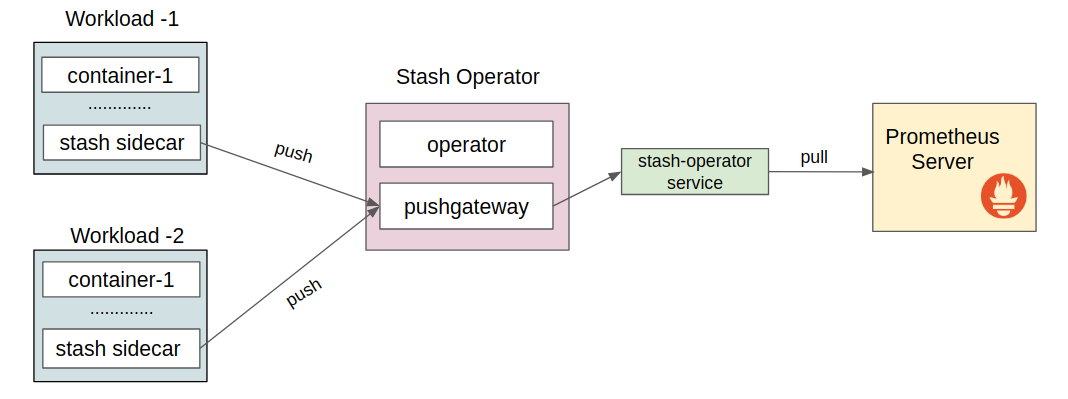

A complete Kubernetes native disaster recovery solution for backup and restore your volumes and databases in Kubernetes on any public and private clouds.

- task_altDeclarative API

- task_altBackup Kubernetes Volumes

- task_altBackup Database

- task_altMultiple Storage Support

- task_altDeduplication

- task_altData Encryption

- task_altVolume Snapshot

- task_altPolicy Based Backup

KubeVault is a Git-Ops ready, production-grade solution for deploying and configuring Hashicorp's Vault on Kubernetes.

- task_altVault Kubernetes Deployment

- task_altAuto Initialization & Unsealing

- task_altVault Backup & Restore

- task_altConsume KubeVault Secrets with CSI

- task_altManage DB Users Privileges

- task_altStorage Backend

- task_altAuthentication Method

- task_altDatabase Secret Engine

Secure Ingress Controller for Kubernetes

- task_altHTTP & TCP

- task_altSSL

- task_altPlatform support

- task_altHAProxy

- task_altPrometheus

- task_altLet's Encrypt

Kubernetes Configuration Syncer

- task_altConfiguration Syncer

Kubernetes Authentication WebHook Server

- task_altIdentity Providers

- task_altCLI

- task_altRBAC
