You are looking at the documentation of a prior release. To read the documentation of the latest release, please visit here.

New to Stash? Please start here.

Using Stash with Google Kubernetes Engine (GKE)

This tutorial will show you how to use Stash to back up and restore a Kubernetes deployment in Google Kubernetes Engine. Here, we are going to back up the /source/data folder of a busybox pod into a GCS bucket. Then, we are going to show how to recover this data into a gcePersistentDisk and a PersistentVolumeClaim. We are also going to re-deploy the deployment using the recovered volume.

Before You Begin

First, you need to have a Kubernetes cluster in Google Cloud Platform. If you don’t already have a cluster, you can create one from here.

Install Stash in your cluster following the steps here.

You should be familiar with the following Stash concepts:

You will need a GCS bucket and a GCE persistent disk. The GCE persistent disk must be in the same GCE project and zone as the cluster.
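Both resources can be created from the Google Cloud console. As a rough command-line alternative, the sketch below wraps `gsutil mb` and `gcloud compute disks create` in a small function. The bucket name stash-backup-repo and the disk name stash-recovered are the ones this tutorial uses later; the zone and disk size are placeholder assumptions, and because bucket names are globally unique you will most likely need a different bucket name.

```shell
# Names taken from later steps of this tutorial.
BUCKET="stash-backup-repo"
DISK="stash-recovered"
# Assumptions: replace with your cluster's zone and a size that fits your data.
ZONE="us-central1-a"
SIZE="10GB"

# Create the GCS bucket and the GCE persistent disk.
create_backup_resources() {
  gsutil mb "gs://${BUCKET}"
  gcloud compute disks create "${DISK}" --size="${SIZE}" --zone="${ZONE}"
}
```

Call `create_backup_resources` once you are authenticated against the right project.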

To keep things isolated, we are going to use a separate namespace called demo throughout this tutorial.

$ kubectl create ns demo

namespace/demo created

Note: YAML files used in this tutorial are stored in the /docs/examples/platforms/gke directory of the stashed/docs repository.

Backup

In order to take a backup, we need some sample data. Stash has some sample data in the stash-data repository. As the gitRepo volume has been deprecated, we are not going to use this repository as a volume directly. Instead, we are going to create a ConfigMap from the stash-data repository and use that ConfigMap as the data source.

Let’s create a ConfigMap from these sample data,

$ kubectl create configmap -n demo stash-sample-data \
  --from-literal=LICENSE="$(curl -fsSL https://github.com/stashed/stash-data/raw/master/LICENSE)" \
  --from-literal=README.md="$(curl -fsSL https://github.com/stashed/stash-data/raw/master/README.md)"

configmap/stash-sample-data created

Deploy Workload:

Now, deploy the following Deployment. Here, we have mounted the ConfigMap stash-sample-data as the data source volume.

Below is the YAML for the Deployment we are going to create.

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: stash-demo
  name: stash-demo
  namespace: demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: stash-demo
  template:
    metadata:
      labels:
        app: stash-demo
      name: busybox
    spec:
      containers:
      - args:
        - sleep
        - "3600"
        image: busybox
        imagePullPolicy: IfNotPresent
        name: busybox
        volumeMounts:
        - mountPath: /source/data
          name: source-data
      restartPolicy: Always
      volumes:
      - name: source-data
        configMap:
          name: stash-sample-data

Let’s create the deployment we have shown above,

$ kubectl apply -f https://github.com/stashed/docs/raw/v2021.03.08/docs/examples/platforms/gke/deployment.yaml

deployment.apps/stash-demo created

Now, wait for the deployment’s pod to reach the Running state.

$ kubectl get pods -n demo -l app=stash-demo

NAME READY STATUS RESTARTS AGE

stash-demo-b66b9cdfd-8s98d 1/1 Running 0 6m

You can check that the /source/data/ directory of the pod is populated with data from the volume source using this command,

$ kubectl exec -n demo stash-demo-b66b9cdfd-8s98d -- ls -R /source/data/

/source/data:

LICENSE

README.md
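Pod names such as stash-demo-b66b9cdfd-8s98d are generated and will differ in your cluster. As a small sketch, the helper functions below (names are ours, not part of Stash) look up the pod by the app=stash-demo label with a jsonpath query and then run the same listing command against whichever pod is found.

```shell
# Print the name of the first pod that matches the tutorial's label selector.
demo_pod() {
  kubectl get pods -n demo -l app=stash-demo \
    -o jsonpath='{.items[0].metadata.name}'
}

# List the mounted sample data in that pod.
list_source_data() {
  kubectl exec -n demo "$(demo_pod)" -- ls -R /source/data/
}
```

Running `list_source_data` should print the same LICENSE and README.md entries shown above.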

Now, we are ready to back up the /source/data directory into a GCS bucket.

Create Secret:

First, we need to create a storage secret that holds the credentials for the backend. To configure this backend, the following secret keys are needed:

| Key | Description |
|---|---|
| RESTIC_PASSWORD | Required. Password used to encrypt snapshots by restic |
| GOOGLE_PROJECT_ID | Required. Google Cloud project ID |
| GOOGLE_SERVICE_ACCOUNT_JSON_KEY | Required. Google Cloud service account json key |

Create the storage secret as shown below,

$ echo -n 'changeit' > RESTIC_PASSWORD

$ echo -n '<your-project-id>' > GOOGLE_PROJECT_ID

$ cat /path/to/downloaded-sa-json.key > GOOGLE_SERVICE_ACCOUNT_JSON_KEY

$ kubectl create secret generic -n demo gcs-secret \
  --from-file=./RESTIC_PASSWORD \
  --from-file=./GOOGLE_PROJECT_ID \
  --from-file=./GOOGLE_SERVICE_ACCOUNT_JSON_KEY

secret "gcs-secret" created
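The commands above use `echo -n` so that no trailing newline ends up in the files; `--from-file` stores the file contents verbatim, so a stray newline would become part of the password. Since the behaviour of `echo -n` varies between shells, here is a minimal sketch that uses `printf` instead and checks the resulting size. The temporary directory is only for illustration.

```shell
# Write the password without a trailing newline into a scratch directory.
workdir="$(mktemp -d)"
printf '%s' 'changeit' > "$workdir/RESTIC_PASSWORD"

# 'changeit' is 8 characters, so the file should be exactly 8 bytes.
password_bytes=$(( $(wc -c < "$workdir/RESTIC_PASSWORD") ))
echo "RESTIC_PASSWORD is ${password_bytes} bytes"
```

If the reported size is 9, a newline was written along with the password.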

Verify that the secret has been created successfully,

$ kubectl get secret -n demo gcs-secret -o yaml

apiVersion: v1
data:
  GOOGLE_PROJECT_ID: dXNlIHlvdXIgb3duIGNyZWRlbnRpYWxz # <base64 encoded google project id>
  GOOGLE_SERVICE_ACCOUNT_JSON_KEY: dXNlIHlvdXIgb3duIGNyZWRlbnRpYWxz # <base64 encoded google service account json key>
  RESTIC_PASSWORD: Y2hhbmdlaXQ=
kind: Secret
metadata:
  creationTimestamp: 2018-04-11T12:57:05Z
  name: gcs-secret
  namespace: demo
  resourceVersion: "7113"
  selfLink: /api/v1/namespaces/demo/secrets/gcs-secret
  uid: d5e70521-3d87-11e8-a5b9-42010a800002
type: Opaque
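The values under data are base64 encoded, not encrypted. As a quick sanity check, the sketch below decodes a value copied from the output above; the helper name is ours. Against a live cluster, the same value could be fetched with `kubectl get secret -n demo gcs-secret -o jsonpath='{.data.RESTIC_PASSWORD}'` and piped into the same decoder.

```shell
# Decode a base64 value copied from the `data` section of the Secret.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

# The RESTIC_PASSWORD value shown above decodes to the password we stored.
decode_secret_value 'Y2hhbmdlaXQ='   # prints: changeit
```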

Create Restic:

Now, we can create the Restic crd. This will create a repository in the GCS bucket specified in the gcs.bucket field and start taking periodic backups of the /source/data directory.

$ kubectl apply -f https://github.com/stashed/docs/raw/v2021.03.08/docs/examples/platforms/gke/restic.yaml

restic.stash.appscode.com/gcs-restic created

Below is the YAML for the Restic crd we have created above,

apiVersion: stash.appscode.com/v1alpha1
kind: Restic
metadata:
  name: gcs-restic
  namespace: demo
spec:
  selector:
    matchLabels:
      app: stash-demo
  fileGroups:
  - path: /source/data
    retentionPolicyName: 'keep-last-5'
  backend:
    gcs:
      bucket: stash-backup-repo
      prefix: demo
    storageSecretName: gcs-secret
  schedule: '@every 1m'
  volumeMounts:
  - mountPath: /source/data
    name: source-data
  retentionPolicies:
  - name: 'keep-last-5'
    keepLast: 5
    prune: true

If everything goes well, Stash will inject a sidecar container into the stash-demo deployment to take periodic backups. Let’s check that the sidecar has been injected successfully,

$ kubectl get pod -n demo -l app=stash-demo

NAME READY STATUS RESTARTS AGE

stash-demo-6b8c94cdd7-8jhtn 2/2 Running 1 1h

Look at the pod. It now has 2 containers. If you view the resource definition of this pod, you will see there is a container named stash which is running the backup command.
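Rather than reading the full pod definition, the container names can be listed with a jsonpath query. The sketch below assumes the sidecar container is named stash, as described above; the function names are ours.

```shell
# Print the space-separated container names of the first stash-demo pod.
pod_containers() {
  kubectl get pods -n demo -l app=stash-demo \
    -o jsonpath='{.items[0].spec.containers[*].name}'
}

# Succeed only if a container named "stash" is present in the pod.
has_stash_sidecar() {
  case " $(pod_containers) " in
    *" stash "*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `has_stash_sidecar && echo "sidecar injected"` prints the message only after the injection has happened.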

Verify Backup:

Stash will create a Repository crd with name deployment.stash-demo for the respective repository in GCS backend. To verify, run the following command,

$ kubectl get repository deployment.stash-demo -n demo

NAME BACKUPCOUNT LASTSUCCESSFULBACKUP AGE

deployment.stash-demo 1 13s 1m

Here, the BACKUPCOUNT field indicates the number of backup snapshots that have been taken in this repository.

Restic will take a backup of the volume periodically at a 1-minute interval. You can verify that backups are being taken successfully by,

$ kubectl get snapshots -n demo -l repository=deployment.stash-demo

NAME AGE

deployment.stash-demo-c1014ca6 10s

Here, deployment.stash-demo-c1014ca6 represents the name of the successful backup Snapshot taken by Stash in deployment.stash-demo repository.

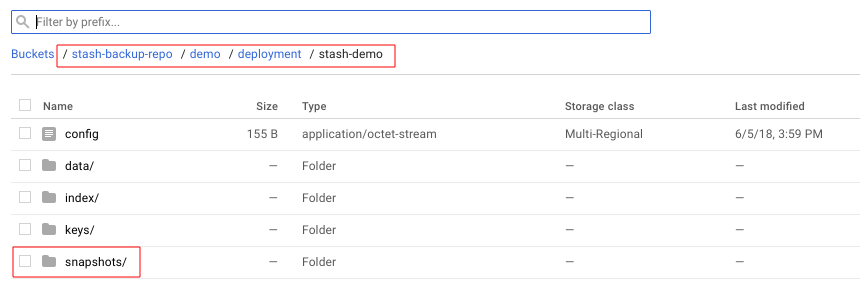

If you navigate to <bucket name>/demo/deployment/stash-demo directory in your GCS bucket. You will see, a repository has been created there.

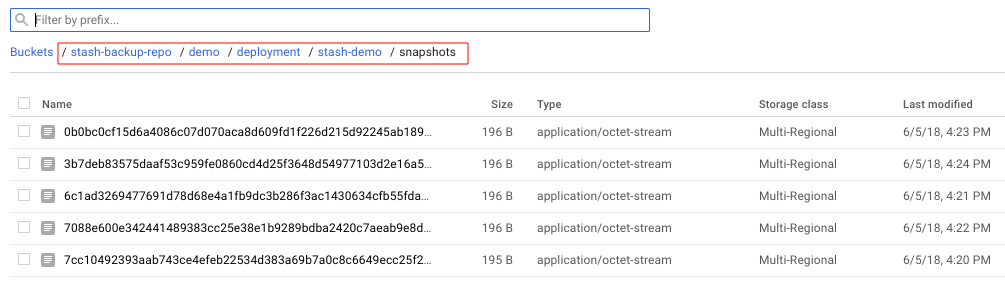

To view the snapshot files, navigate to snapshots directory of the repository,

Stash keeps all backup data encrypted. So, snapshot files in the bucket will not contain any meaningful data until they are decrypted.

Recovery

Now, consider that we have lost our workload as well as the data volume. We want to recover the data into a new volume and re-deploy the workload. In this section, we are going to see how to recover data into a gcePersistentDisk and a persistentVolumeClaim.

First, let’s delete the Restic crd, the stash-demo deployment, and the stash-sample-data ConfigMap.

$ kubectl delete deployment -n demo stash-demo

deployment.extensions "stash-demo" deleted

$ kubectl delete restic -n demo gcs-restic

restic.stash.appscode.com "gcs-restic" deleted

$ kubectl delete configmap -n demo stash-sample-data

configmap "stash-sample-data" deleted

In order to perform recovery, we need the Repository crd deployment.stash-demo and the backend secret gcs-secret to exist.

In case of cluster disaster, you might lose the Repository crd and the backend secret. In this scenario, you have to create the secret and the Repository crd again manually. Follow the guide to understand the Repository crd structure from here.

Recover to GCE Persistent Disk

Now, we are going to recover the backed up data into a GCE Persistent Disk. First, create a GCE disk named stash-recovered from the Google Cloud console. Then create the Recovery crd,

$ kubectl apply -f https://github.com/stashed/docs/raw/v2021.03.08/docs/examples/platforms/gke/recovery-gcePD.yaml

recovery.stash.appscode.com/gcs-recovery created

Below is the YAML for the Recovery crd we have created above.

apiVersion: stash.appscode.com/v1alpha1
kind: Recovery
metadata:
  name: gcs-recovery
  namespace: demo
spec:
  repository:
    name: deployment.stash-demo
    namespace: demo
  paths:
  - /source/data
  recoveredVolumes:
  - mountPath: /source/data
    gcePersistentDisk:
      pdName: stash-recovered
      fsType: ext4

Wait until the Recovery job completes its task. To verify that the recovery has completed successfully, run,

$ kubectl get recovery -n demo gcs-recovery

NAME           REPOSITORYNAMESPACE   REPOSITORYNAME          SNAPSHOT   PHASE       AGE
gcs-recovery   demo                  deployment.stash-demo              Succeeded   3m

Here, PHASE Succeeded indicates that our recovery has been completed successfully. Backup data has been restored in the stash-recovered Persistent Disk. Now, we are ready to use this Persistent Disk to re-deploy the workload.

If you are using a Kubernetes version older than v1.11.0, run the following command and check the status.phase field to see whether the recovery succeeded or failed.

$ kubectl get recovery -n demo gcs-recovery -o yaml
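Instead of re-running the command by hand, the status.phase field can be polled in a loop. This is only a sketch: the function name, attempt count, and delay are our own choices, and while Succeeded appears in the output above, treating Failed as the terminal failure value is an assumption.

```shell
# Poll a Recovery's status.phase until it finishes or we give up.
# Usage: wait_for_recovery <name> [attempts] [delay-seconds]
wait_for_recovery() {
  rec_name="$1"
  rec_attempts="${2:-30}"
  rec_delay="${3:-10}"
  rec_try=0
  while [ "$rec_try" -lt "$rec_attempts" ]; do
    rec_phase="$(kubectl get recovery -n demo "$rec_name" -o jsonpath='{.status.phase}')"
    case "$rec_phase" in
      Succeeded) echo "recovery $rec_name succeeded"; return 0 ;;
      # Assumption: "Failed" is the phase reported on failure.
      Failed) echo "recovery $rec_name failed" >&2; return 1 ;;
    esac
    sleep "$rec_delay"
    rec_try=$((rec_try + 1))
  done
  echo "timed out waiting for recovery $rec_name" >&2
  return 1
}
```

For this tutorial, `wait_for_recovery gcs-recovery` would check every 10 seconds for up to 5 minutes.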

Re-deploy Workload:

We have successfully restored the backup data into the stash-recovered gcePersistentDisk. Now, we are going to re-deploy our previous deployment stash-demo. This time, we are going to mount the stash-recovered Persistent Disk as the source-data volume instead of the ConfigMap stash-sample-data.

Below is the YAML for the stash-demo deployment with the stash-recovered persistent disk as the source-data volume.

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: stash-demo
  name: stash-demo
  namespace: demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: stash-demo
  template:
    metadata:
      labels:
        app: stash-demo
      name: busybox
    spec:
      containers:
      - args:
        - sleep
        - "3600"
        image: busybox
        imagePullPolicy: IfNotPresent
        name: busybox
        volumeMounts:
        - mountPath: /source/data
          name: source-data
      restartPolicy: Always
      volumes:
      - name: source-data
        gcePersistentDisk:
          pdName: stash-recovered
          fsType: ext4

Let’s create the deployment,

$ kubectl apply -f https://github.com/stashed/docs/raw/v2021.03.08/docs/examples/platforms/gke/restored-deployment-gcePD.yaml

deployment.apps/stash-demo created

Verify Recovered Data:

We have re-deployed the stash-demo deployment with the recovered volume. Now, it is time to verify that the data are present in the /source/data directory.

Get the pod of the new deployment,

$ kubectl get pods -n demo -l app=stash-demo

NAME READY STATUS RESTARTS AGE

stash-demo-857995799-gpml9 1/1 Running 0 34s

Run the following command to view the contents of the /source/data directory of this pod,

$ kubectl exec -n demo stash-demo-857995799-gpml9 -- ls -R /source/data

/source/data:

LICENSE

README.md

lost+found

/source/data/lost+found:

So, we can see that the data we had backed up from the original deployment are now present in the re-deployed deployment.

Recover to PersistentVolumeClaim

Here, we are going to show how to recover the backed up data into a PVC. If you have re-deployed the stash-demo deployment by following the previous section on gcePersistentDisk, delete the deployment first,

$ kubectl delete deployment -n demo stash-demo

deployment.apps/stash-demo deleted

Now, create a PersistentVolumeClaim where our recovered data will be stored.

$ kubectl apply -f https://github.com/stashed/docs/raw/v2021.03.08/docs/examples/platforms/gke/pvc.yaml

persistentvolumeclaim/stash-recovered created

Below is the YAML for the PersistentVolumeClaim we have created above,

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: stash-recovered
  namespace: demo
  labels:
    app: stash-demo
spec:
  storageClassName: standard
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi

Check whether the cluster has provisioned the requested claim,

$ kubectl get pvc -n demo -l app=stash-demo

NAME              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
stash-recovered   Bound    pvc-57bec6e5-3e11-11e8-951b-42010a80002e   2Gi        RWO            standard       1m

Look at the STATUS field. The stash-recovered PVC is bound to the volume pvc-57bec6e5-3e11-11e8-951b-42010a80002e.
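The same check can be scripted before creating the Recovery, so that recovery is not attempted against an unbound claim. A minimal sketch, with function names of our own choosing, reads the claim's status.phase through jsonpath:

```shell
# Print the phase of a PVC in the demo namespace (for example "Bound" or "Pending").
pvc_phase() {
  kubectl get pvc -n demo "$1" -o jsonpath='{.status.phase}'
}

# Succeed only if the named PVC is bound to a volume.
pvc_is_bound() {
  [ "$(pvc_phase "$1")" = "Bound" ]
}
```

For example, `pvc_is_bound stash-recovered || echo "claim not bound yet"` reports when the claim is still waiting for a volume.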

Create Recovery:

Now, we have to create a Recovery crd to recover backed up data into this PVC.

$ kubectl apply -f https://github.com/stashed/docs/raw/v2021.03.08/docs/examples/platforms/gke/recovery-pvc.yaml

recovery.stash.appscode.com/gcs-recovery created

Below is the YAML for the Recovery crd we have created above.

apiVersion: stash.appscode.com/v1alpha1
kind: Recovery
metadata:
  name: gcs-recovery
  namespace: demo
spec:
  repository:
    name: deployment.stash-demo
    namespace: demo
  paths:
  - /source/data
  recoveredVolumes:
  - mountPath: /source/data
    persistentVolumeClaim:
      claimName: stash-recovered

Wait until the Recovery job completes its task. To verify that the recovery has completed successfully, run,

$ kubectl get recovery -n demo gcs-recovery

NAME           REPOSITORYNAMESPACE   REPOSITORYNAME          SNAPSHOT   PHASE       AGE
gcs-recovery   demo                  deployment.stash-demo              Succeeded   3m

Here, PHASE Succeeded indicates that our recovery has been completed successfully. Backup data has been restored in the stash-recovered PVC. Now, we are ready to use this PVC to re-deploy the workload.

Re-deploy Workload:

We have successfully restored the backup data into the stash-recovered PVC. Now, we are going to re-deploy our previous deployment stash-demo. This time, we are going to mount the stash-recovered PVC as the source-data volume instead of the ConfigMap stash-sample-data.

Below is the YAML for the stash-demo deployment with the stash-recovered PVC as the source-data volume.

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: stash-demo
  name: stash-demo
  namespace: demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: stash-demo
  template:
    metadata:
      labels:
        app: stash-demo
      name: busybox
    spec:
      containers:
      - args:
        - sleep
        - "3600"
        image: busybox
        imagePullPolicy: IfNotPresent
        name: busybox
        volumeMounts:
        - mountPath: /source/data
          name: source-data
      restartPolicy: Always
      volumes:
      - name: source-data
        persistentVolumeClaim:
          claimName: stash-recovered

Let’s create the deployment,

$ kubectl apply -f https://github.com/stashed/docs/raw/v2021.03.08/docs/examples/platforms/gke/restored-deployment-pvc.yaml

deployment.apps/stash-demo created

Verify Recovered Data:

We have re-deployed the stash-demo deployment with the recovered volume. Now, it is time to verify that the data are present in the /source/data directory.

Get the pod of the new deployment,

$ kubectl get pods -n demo -l app=stash-demo

NAME READY STATUS RESTARTS AGE

stash-demo-559845c5db-8cd4w 1/1 Running 0 33s

Run the following command to view the contents of the /source/data directory of this pod,

$ kubectl exec -n demo stash-demo-559845c5db-8cd4w -- ls -R /source/data

/source/data:

LICENSE

README.md

lost+found

/source/data/lost+found:

So, we can see that the data we had backed up from the original deployment are now present in the re-deployed deployment.

Cleanup

To clean up the resources created by this tutorial, run the following commands:

$ kubectl delete recovery -n demo gcs-recovery

$ kubectl delete secret -n demo gcs-secret

$ kubectl delete deployment -n demo stash-demo

$ kubectl delete pvc -n demo stash-recovered

$ kubectl delete repository -n demo deployment.stash-demo

$ kubectl delete ns demo

- To uninstall Stash from your cluster, follow the instructions from here.