You are looking at the documentation of a prior release. To read the documentation of the latest release, please visit here.

Snapshotting a Standalone PVC

This guide will show you how to use Stash to snapshot standalone PersistentVolumeClaims and restore them from the snapshots using the Kubernetes VolumeSnapshot API. In this guide, we are going to back up the volumes in Google Cloud Platform with the help of the GCE Persistent Disk CSI Driver.

Before You Begin

- First, you need to be familiar with the GCE Persistent Disk CSI Driver.

- Install Stash in your cluster following the steps here.

- If you don’t know how VolumeSnapshot works in Stash, please visit here.

Prepare for VolumeSnapshot

Here, we are going to create a StorageClass that uses the GCE Persistent Disk CSI Driver.

Below is the YAML of the StorageClass we are going to use,

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-standard
parameters:
  type: pd-standard
provisioner: pd.csi.storage.gke.io
reclaimPolicy: Delete
volumeBindingMode: Immediate

Let’s create the StorageClass we have shown above,

$ kubectl apply -f https://github.com/stashed/docs/raw/v2025.6.30/docs/guides/volumesnapshot/pvc/examples/storageclass.yaml

storageclass.storage.k8s.io/csi-standard created

We also need a VolumeSnapshotClass. Below is the YAML of the VolumeSnapshotClass we are going to use,

apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: csi-snapshot-class
driver: pd.csi.storage.gke.io
deletionPolicy: Delete

Here, the driver field points to the CSI driver that is responsible for taking snapshots. As we are using the GCE Persistent Disk CSI Driver, we set this field to pd.csi.storage.gke.io.
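In general, a VolumeSnapshotClass can only snapshot volumes handled by the CSI driver it names, so its driver should match the provisioner of the StorageClass that provisioned the PVC. The sketch below is a hypothetical helper (not part of Stash or kubectl) that compares the two objects once they have been loaded as Python dictionaries, for example from kubectl get ... -o json.

```python
def driver_matches(storage_class: dict, snapshot_class: dict) -> bool:
    """Return True if the VolumeSnapshotClass names the same CSI driver
    that provisions volumes for the StorageClass (hypothetical helper)."""
    provisioner = storage_class.get("provisioner")
    driver = snapshot_class.get("driver")
    return provisioner is not None and provisioner == driver


# The two objects created above, written as dictionaries.
storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "csi-standard"},
    "parameters": {"type": "pd-standard"},
    "provisioner": "pd.csi.storage.gke.io",
    "reclaimPolicy": "Delete",
    "volumeBindingMode": "Immediate",
}

snapshot_class = {
    "apiVersion": "snapshot.storage.k8s.io/v1",
    "kind": "VolumeSnapshotClass",
    "metadata": {"name": "csi-snapshot-class"},
    "driver": "pd.csi.storage.gke.io",
    "deletionPolicy": "Delete",
}

print(driver_matches(storage_class, snapshot_class))  # True
```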

Let’s create the VolumeSnapshotClass we have shown above,

$ kubectl apply -f https://github.com/stashed/docs/raw/v2025.6.30/docs/guides/volumesnapshot/pvc/examples/volumesnapshotclass.yaml

volumesnapshotclass.snapshot.storage.k8s.io/csi-snapshot-class created

To keep everything isolated, we are going to use a separate namespace called demo throughout this tutorial.

$ kubectl create ns demo

namespace/demo created

Note: YAML files used in this tutorial are stored in /docs/guides/volumesnapshot/pvc/examples directory of stashed/docs repository.

Take Volume Snapshot

Here, we are going to create a PVC, mount it in a pod, and generate some sample data on it. Then, we are going to take a snapshot of this PVC using Stash.

Create PersistentVolumeClaim :

First, let’s create a PVC. We are going to mount this PVC in a pod.

Below is the YAML of the sample PVC,

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: source-data
  namespace: demo
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: csi-standard
  resources:
    requests:
      storage: 1Gi

Let’s create the PVC we have shown above.

$ kubectl apply -f https://github.com/stashed/docs/raw/v2025.6.30/docs/guides/volumesnapshot/pvc/examples/source-pvc.yaml

persistentvolumeclaim/source-data created
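Since kubectl apply -f also accepts JSON, a PVC like the one above can be generated programmatically. In this minimal sketch, make_pvc is a hypothetical helper; it requests the csi-standard StorageClass created earlier so that the volume is provisioned by pd.csi.storage.gke.io, the driver named in the VolumeSnapshotClass.

```python
import json


def make_pvc(name: str, namespace: str, storage_class: str, size: str) -> dict:
    """Build a PersistentVolumeClaim manifest as a plain dict (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


pvc = make_pvc("source-data", "demo", "csi-standard", "1Gi")
# Print the manifest as JSON so it can be piped into: kubectl apply -f -
print(json.dumps(pvc, indent=2))
```

For example, if the script were saved as make_pvc.py (a hypothetical file name), python make_pvc.py | kubectl apply -f - would create the same PVC.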

Create Pod :

Now, we are going to deploy a pod that uses the above PVC. The pod mounts the PVC at /source/data and, on startup, writes some sample data into a data.txt file in that directory.

Below is the YAML of the pod that we are going to create,

apiVersion: v1
kind: Pod
metadata:
  name: source-pod
  namespace: demo
spec:
  containers:
  - name: busybox
    image: busybox
    command: ["/bin/sh", "-c"]
    args: ["echo sample_data > /source/data/data.txt && sleep 3000"]
    volumeMounts:
    - name: source-data
      mountPath: /source/data
  volumes:
  - name: source-data
    persistentVolumeClaim:
      claimName: source-data
      readOnly: false

Let’s create the Pod we have shown above.

$ kubectl apply -f https://github.com/stashed/docs/raw/v2025.6.30/docs/guides/volumesnapshot/pvc/examples/source-pod.yaml

pod/source-pod created

Now, wait for the Pod to go into the Running state.

$ kubectl get pod -n demo

NAME         READY   STATUS    RESTARTS   AGE
source-pod   1/1     Running   0          25s

Verify that the sample data has been created in the /source/data directory of source-pod using the following command,

$ kubectl exec -n demo source-pod -- cat /source/data/data.txt

sample_data

Create BackupConfiguration :

Now, create a BackupConfiguration crd to take a snapshot of the source-data PVC.

Below is the YAML of the BackupConfiguration crd that we are going to create,

apiVersion: stash.appscode.com/v1beta1
kind: BackupConfiguration
metadata:
  name: pvc-volume-snapshot
  namespace: demo
spec:
  schedule: "*/5 * * * *"
  driver: VolumeSnapshotter
  target:
    ref:
      apiVersion: v1
      kind: PersistentVolumeClaim
      name: source-data
    snapshotClassName: csi-snapshot-class
  retentionPolicy:
    name: 'keep-last-5'
    keepLast: 5
    prune: true

Here,

- spec.schedule is a cron expression indicating that a BackupSession will be created at a 5-minute interval.

- spec.driver indicates the name of the agent to use to back up the target. Currently, Stash supports the Restic and VolumeSnapshotter drivers. The VolumeSnapshotter driver is used to back up and restore PVCs using the VolumeSnapshot API.

- spec.target.ref refers to the backup target. apiVersion, kind and name refer to the apiVersion, kind and name of the target (here, the source-data PVC) respectively. Stash will use this information to create a volume snapshotter Job that creates the VolumeSnapshot.

- spec.target.snapshotClassName indicates the VolumeSnapshotClass to be used for volume snapshotting.
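To see concretely what "*/5" means, the sketch below expands the minute field of a cron expression into the minutes of each hour at which a BackupSession would be created. It illustrates standard cron step syntax and is not code from Stash or Kubernetes; only the "*", "*/N", and comma-separated literal forms are handled, so ranges such as "10-20" are rejected.

```python
from typing import List


def expand_minute_field(field: str) -> List[int]:
    """Expand a cron minute field such as "*/5", "*", "10" or "0,30"
    into the sorted list of minutes (0-59) at which the schedule fires."""
    minutes = set()
    for part in field.split(","):
        if part == "*":
            minutes.update(range(60))
        elif part.startswith("*/"):
            step = int(part[2:])
            if step <= 0:
                raise ValueError(f"invalid step: {part}")
            minutes.update(range(0, 60, step))
        else:
            value = int(part)  # raises ValueError for unsupported forms like "10-20"
            if not 0 <= value <= 59:
                raise ValueError(f"minute out of range: {part}")
            minutes.add(value)
    return sorted(minutes)


schedule = "*/5 * * * *"
minute_field = schedule.split()[0]
print(expand_minute_field(minute_field))
# [0, 5, 10, 15, 20, 25, 30, 35, 40, 45, 50, 55]
```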

Let’s create the BackupConfiguration crd we have shown above.

$ kubectl apply -f https://github.com/stashed/docs/raw/v2025.6.30/docs/guides/volumesnapshot/pvc/examples/backupconfiguration.yaml

backupconfiguration.stash.appscode.com/pvc-volume-snapshot created
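The retentionPolicy in this BackupConfiguration sets keepLast: 5 with prune: true, so once more than five snapshots exist, the older ones are candidates for removal. The sketch below only illustrates that keep-last selection rule; snapshots_to_prune is a hypothetical helper, the snapshot names are made up, and ordering by creationTimestamp is an assumption about how the policy is evaluated.

```python
from datetime import datetime
from typing import Dict, List


def snapshots_to_prune(snapshots: List[Dict[str, str]], keep_last: int) -> List[str]:
    """Return the names of snapshots outside the newest `keep_last`,
    newest first (illustration of a keepLast policy with prune enabled)."""
    ordered = sorted(
        snapshots,
        # Kubernetes timestamps end in "Z"; fromisoformat needs an explicit offset.
        key=lambda s: datetime.fromisoformat(s["creationTimestamp"].replace("Z", "+00:00")),
        reverse=True,
    )
    return [s["name"] for s in ordered[keep_last:]]


# Seven hypothetical snapshots taken five minutes apart.
snapshots = [
    {"name": f"source-data-{i}", "creationTimestamp": f"2019-07-15T10:{5 * i:02d}:00Z"}
    for i in range(7)
]
print(snapshots_to_prune(snapshots, keep_last=5))  # ['source-data-1', 'source-data-0']
```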

Verify CronJob :

If everything goes well, Stash will create a CronJob to take periodic snapshots of the PVC with the schedule specified in the spec.schedule field of the BackupConfiguration crd.

Check that the CronJob has been created using the following command,

$ kubectl get cronjob -n demo

NAME                  SCHEDULE      SUSPEND   ACTIVE   LAST SCHEDULE   AGE
pvc-volume-snapshot   */5 * * * *   False     0        39s             2m41s

Wait for BackupSession :

The pvc-volume-snapshot CronJob will trigger a backup on each scheduled time slot by creating a BackupSession crd.

Wait for the next scheduled backup. Run the following command to watch BackupSession crds,

$ watch -n 1 kubectl get backupsession -n demo

Every 1.0s: kubectl get backupsession -n demo

NAME                        INVOKER-TYPE          INVOKER-NAME          PHASE       AGE
pvc-volume-snapshot-fnbwz   BackupConfiguration   pvc-volume-snapshot   Succeeded   1m32s

We can see above that the backup session has succeeded. Now, we are going to verify that the VolumeSnapshot has been created and the snapshots have been stored in the respective backend.
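Instead of watching the table, a script can read kubectl get backupsession -n demo -o json and inspect status.phase of each item. This is a minimal sketch under stated assumptions: it expects the usual Kubernetes list shape (an items array), treats only Succeeded and Failed as terminal (Stash defines other phases as well), and the sample input is an abbreviated stand-in, not real output.

```python
import json
from typing import Dict

TERMINAL_PHASES = {"Succeeded", "Failed"}


def session_phases(list_json: str) -> Dict[str, str]:
    """Map each BackupSession name to its status.phase ("" if not set yet)."""
    data = json.loads(list_json)
    return {
        item["metadata"]["name"]: item.get("status", {}).get("phase", "")
        for item in data.get("items", [])
    }


def all_finished(phases: Dict[str, str]) -> bool:
    """True when at least one session exists and every session is in a terminal phase."""
    return bool(phases) and all(p in TERMINAL_PHASES for p in phases.values())


# Abbreviated sample shaped like `kubectl get backupsession -n demo -o json` output.
sample = json.dumps({
    "kind": "List",
    "items": [
        {"metadata": {"name": "pvc-volume-snapshot-fnbwz"},
         "status": {"phase": "Succeeded"}},
    ],
})

phases = session_phases(sample)
print(phases)  # {'pvc-volume-snapshot-fnbwz': 'Succeeded'}
print(all_finished(phases))  # True
```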

Verify Volume Snapshot :

Once a BackupSession crd is created, Stash creates a volume snapshotter Job, which in turn creates a VolumeSnapshot crd for the targeted PVC.

Check that the VolumeSnapshot has been created successfully.

$ kubectl get volumesnapshot -n demo

NAME                AGE
source-data-fnbwz   1m30s

Let’s find out the actual snapshot name that has been saved in Google Cloud using the following command,

$ kubectl get volumesnapshot source-data-fnbwz -n demo -o yaml

apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  creationTimestamp: "2019-07-15T10:31:09Z"
  finalizers:
  - snapshot.storage.kubernetes.io/volumesnapshot-protection
  generation: 4
  name: source-data-fnbwz
  namespace: demo
  resourceVersion: "32098"
  selfLink: /apis/snapshot.storage.k8s.io/v1/namespaces/demo/volumesnapshots/source-data-fnbwz
  uid: a8e8faeb-a6eb-11e9-9f3a-42010a800050
spec:
  source:
    persistentVolumeClaimName: source-data
  volumeSnapshotClassName: csi-snapshot-class
status:
  boundVolumeSnapshotContentName: snapcontent-a8e8faeb-a6eb-11e9-9f3a-42010a800050
  creationTime: "2019-07-15T10:31:10Z"
  readyToUse: true
  restoreSize: 1Gi

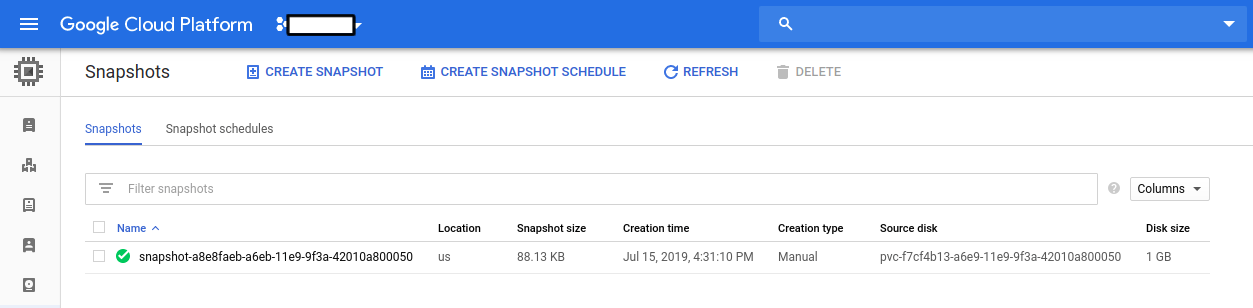

Here, the status.boundVolumeSnapshotContentName field specifies the name of the VolumeSnapshotContent crd. It also represents the actual snapshot name that has been saved in Google Cloud. If we navigate to the Snapshots tab in the GCP console, we are going to see that the snapshot snapcontent-a8e8faeb-a6eb-11e9-9f3a-42010a800050 has been stored successfully.
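Before using a snapshot as a restore source, it is worth checking status.readyToUse. A minimal sketch, assuming the VolumeSnapshot has already been fetched and parsed (for example from kubectl get volumesnapshot source-data-fnbwz -n demo -o json); snapshot_summary is a hypothetical helper, and the sample object reuses fields from the output above.

```python
from typing import Optional, Tuple


def snapshot_summary(volume_snapshot: dict) -> Tuple[bool, Optional[str]]:
    """Return (readyToUse, boundVolumeSnapshotContentName) from a VolumeSnapshot.
    Missing status fields are reported as (False, None)."""
    status = volume_snapshot.get("status") or {}
    return bool(status.get("readyToUse", False)), status.get("boundVolumeSnapshotContentName")


# Fields copied from the VolumeSnapshot output above.
vs = {
    "apiVersion": "snapshot.storage.k8s.io/v1",
    "kind": "VolumeSnapshot",
    "metadata": {"name": "source-data-fnbwz", "namespace": "demo"},
    "spec": {
        "source": {"persistentVolumeClaimName": "source-data"},
        "volumeSnapshotClassName": "csi-snapshot-class",
    },
    "status": {
        "boundVolumeSnapshotContentName": "snapcontent-a8e8faeb-a6eb-11e9-9f3a-42010a800050",
        "readyToUse": True,
        "restoreSize": "1Gi",
    },
}

ready, content = snapshot_summary(vs)
print(ready, content)  # True snapcontent-a8e8faeb-a6eb-11e9-9f3a-42010a800050
```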

Restore PVC from VolumeSnapshot

This section will show you how to restore the PVC from the snapshot we have taken in the earlier section.

Stop Taking Backup of the Old PVC:

First, let’s stop taking any further backups of the old PVC so that no backup is taken during the restore process. We are going to pause the BackupConfiguration that we created to back up the source-data PVC. Then, Stash will stop taking any further backups for this PVC. You can learn more about how to pause a scheduled backup here.

Let’s pause the pvc-volume-snapshot BackupConfiguration,

$ kubectl patch backupconfiguration -n demo pvc-volume-snapshot --type="merge" --patch='{"spec": {"paused": true}}'

backupconfiguration.stash.appscode.com/pvc-volume-snapshot patched
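The --type="merge" flag sends a JSON merge patch (RFC 7386): nested objects are merged key by key, a null value deletes a key, and any other value replaces the existing one, so only spec.paused changes. The function below is a small illustration of those merge rules written for this guide; it is not how kubectl or the API server implement patching, and the BackupConfiguration object is abbreviated.

```python
import copy


def json_merge_patch(target, patch):
    """Apply a JSON merge patch (RFC 7386) and return a new value.
    Objects merge recursively, None (JSON null) deletes a key, anything else replaces."""
    if not isinstance(patch, dict):
        return copy.deepcopy(patch)
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)
        else:
            result[key] = json_merge_patch(result.get(key), value)
    return result


# Abbreviated BackupConfiguration; only the fields needed for the example.
backup_config = {
    "spec": {
        "schedule": "*/5 * * * *",
        "driver": "VolumeSnapshotter",
    }
}
patched = json_merge_patch(backup_config, {"spec": {"paused": True}})
print(patched["spec"])
# {'schedule': '*/5 * * * *', 'driver': 'VolumeSnapshotter', 'paused': True}
```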

Now, wait for a moment. Stash will pause the BackupConfiguration. Verify that the BackupConfiguration has been paused,

$ kubectl get backupconfiguration -n demo

NAME                  TASK   SCHEDULE      PAUSED   AGE
pvc-volume-snapshot          */5 * * * *   true     22m

Notice the PAUSED column. Value true for this field means that the BackupConfiguration has been paused.

Create RestoreSession :

Now, we have to create a RestoreSession crd to restore the PVC from the respective snapshot.

Below is the YAML of the RestoreSession crd that we are going to create,

apiVersion: stash.appscode.com/v1beta1
kind: RestoreSession
metadata:
  name: restore-pvc
  namespace: demo
spec:
  driver: VolumeSnapshotter
  target:
    volumeClaimTemplates:
    - metadata:
        name: restore-data
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: "csi-standard"
        resources:
          requests:
            storage: 1Gi
        dataSource:
          kind: VolumeSnapshot
          name: source-data-fnbwz
          apiGroup: snapshot.storage.k8s.io

Here,

- spec.target.volumeClaimTemplates:
  - metadata.name is the name of the restored PVC or prefix of the VolumeSnapshot name.
  - spec.dataSource specifies the source of the data from which the newly created PVC will be initialized. It requires the following fields to be set:
    - apiGroup is the group of the resource being referenced. To restore from a snapshot, it must be snapshot.storage.k8s.io.
    - kind is the kind of the resource being referenced. To restore from a snapshot, it must be VolumeSnapshot.
    - name is the VolumeSnapshot resource name. In the RestoreSession crd, you must set the VolumeSnapshot name directly.
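The dataSource rules listed above can be checked before applying the RestoreSession. validate_snapshot_data_source below is a hypothetical pre-flight check written for this guide; Stash and the Kubernetes API server perform their own validation, which may differ. The sample template mirrors the volumeClaimTemplates entry of restore-pvc.

```python
from typing import List


def validate_snapshot_data_source(claim_template: dict) -> List[str]:
    """Return problems found in a volumeClaimTemplates entry that should be
    restored from a VolumeSnapshot; an empty list means it looks valid."""
    problems = []
    if not claim_template.get("metadata", {}).get("name"):
        problems.append("metadata.name is missing")
    source = claim_template.get("spec", {}).get("dataSource") or {}
    if source.get("apiGroup") != "snapshot.storage.k8s.io":
        problems.append("dataSource.apiGroup must be snapshot.storage.k8s.io")
    if source.get("kind") != "VolumeSnapshot":
        problems.append("dataSource.kind must be VolumeSnapshot")
    if not source.get("name"):
        problems.append("dataSource.name must be the VolumeSnapshot name")
    return problems


template = {
    "metadata": {"name": "restore-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "csi-standard",
        "resources": {"requests": {"storage": "1Gi"}},
        "dataSource": {
            "kind": "VolumeSnapshot",
            "name": "source-data-fnbwz",
            "apiGroup": "snapshot.storage.k8s.io",
        },
    },
}
print(validate_snapshot_data_source(template))  # []
```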

Let’s create the RestoreSession crd we have shown above.

$ kubectl apply -f https://github.com/stashed/docs/raw/v2025.6.30/docs/guides/volumesnapshot/pvc/examples/restoresession.yaml

restoresession.stash.appscode.com/restore-pvc created

Once you have created the RestoreSession crd, Stash will create a job to restore the PVC. We can watch the RestoreSession phase to check whether the restore process has succeeded.

Run the following command to watch RestoreSession phase,

$ watch -n 1 kubectl get restore -n demo

Every 1.0s: kubectl get restore -n demo

NAME          REPOSITORY-NAME   PHASE       AGE
restore-pvc                     Running     10s
restore-pvc                     Succeeded   1m

So, we can see from the output of the above command that the restore process succeeded.

Verify Restored PVC :

Once the restore process is complete, we are going to see that a new PVC named restore-data has been created.

To verify that the PVC has been created, run the following command,

$ kubectl get pvc -n demo

NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
restore-data   Bound    pvc-c5f0e7f5-a6ec-11e9-9f3a-42010a800050   1Gi        RWO            csi-standard   52s

Notice the STATUS field. Bound indicates that the respective PV has been provisioned and initialized from the VolumeSnapshot by the CSI driver, and that the PVC has been bound to that PV.

The volumeBindingMode field of a StorageClass controls when volume binding and dynamic provisioning should occur. Kubernetes allows the Immediate and WaitForFirstConsumer modes for binding volumes. The Immediate mode indicates that volume binding and dynamic provisioning occur as soon as the PVC is created, while the WaitForFirstConsumer mode indicates that volume binding and provisioning do not occur until a pod that uses this PVC is created. By default, volumeBindingMode is Immediate.

If you use volumeBindingMode: WaitForFirstConsumer, the PVC will be initialized from the VolumeSnapshot only after you create a workload that uses that PVC. In this case, Stash will mark the restore session as completed with phase Unknown.
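The behaviour described above can be summarised as a small lookup from the StorageClass binding mode to the RestoreSession phase you should expect. This is a hypothetical sketch that encodes only what this guide states, assuming the restore itself succeeds; it treats a missing volumeBindingMode as Immediate, the Kubernetes default.

```python
def expected_restore_phase(storage_class: dict) -> str:
    """Return the RestoreSession phase this guide describes for a StorageClass:
    "Succeeded" when volumes bind immediately, "Unknown" with WaitForFirstConsumer."""
    mode = storage_class.get("volumeBindingMode", "Immediate")  # Kubernetes default
    if mode == "Immediate":
        return "Succeeded"
    if mode == "WaitForFirstConsumer":
        return "Unknown"
    raise ValueError(f"unsupported volumeBindingMode: {mode}")


print(expected_restore_phase({"volumeBindingMode": "Immediate"}))  # Succeeded
print(expected_restore_phase({"volumeBindingMode": "WaitForFirstConsumer"}))  # Unknown
```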

Verify Restored Data :

We are going to create a new pod with the restored PVC to verify whether the backed up data has been restored.

Below is the YAML of the Pod we are going to create.

apiVersion: v1
kind: Pod
metadata:
  name: restored-pod
  namespace: demo
spec:
  containers:
  - name: busybox
    image: busybox
    args:
    - sleep
    - "3600"
    volumeMounts:
    - name: restore-data
      mountPath: /restore/data
  volumes:
  - name: restore-data
    persistentVolumeClaim:
      claimName: restore-data
      readOnly: false

Let’s create the Pod we have shown above.

$ kubectl apply -f https://github.com/stashed/docs/raw/v2025.6.30/docs/guides/volumesnapshot/pvc/examples/restored-pod.yaml

pod/restored-pod created

Now, wait for the Pod to go into the Running state.

$ kubectl get pod -n demo

NAME           READY   STATUS    RESTARTS   AGE
restored-pod   1/1     Running   0          34s

Verify that the backed up data has been restored in the /restore/data directory of restored-pod using the following command,

$ kubectl exec -n demo restored-pod -- cat /restore/data/data.txt

sample_data

Cleaning Up

To clean up the Kubernetes resources created by this tutorial, run:

kubectl delete -n demo pod source-pod
kubectl delete -n demo pod restored-pod
kubectl delete -n demo backupconfiguration pvc-volume-snapshot
kubectl delete -n demo restoresession restore-pvc
kubectl delete -n demo volumesnapshot --all
kubectl delete -n demo pvc --all
kubectl delete storageclass csi-standard
kubectl delete volumesnapshotclass csi-snapshot-class
kubectl delete ns demo